TensorFlow

Deep Learning for Everyone: An Intuitive Understanding of Neural Networks and Linear Algebra

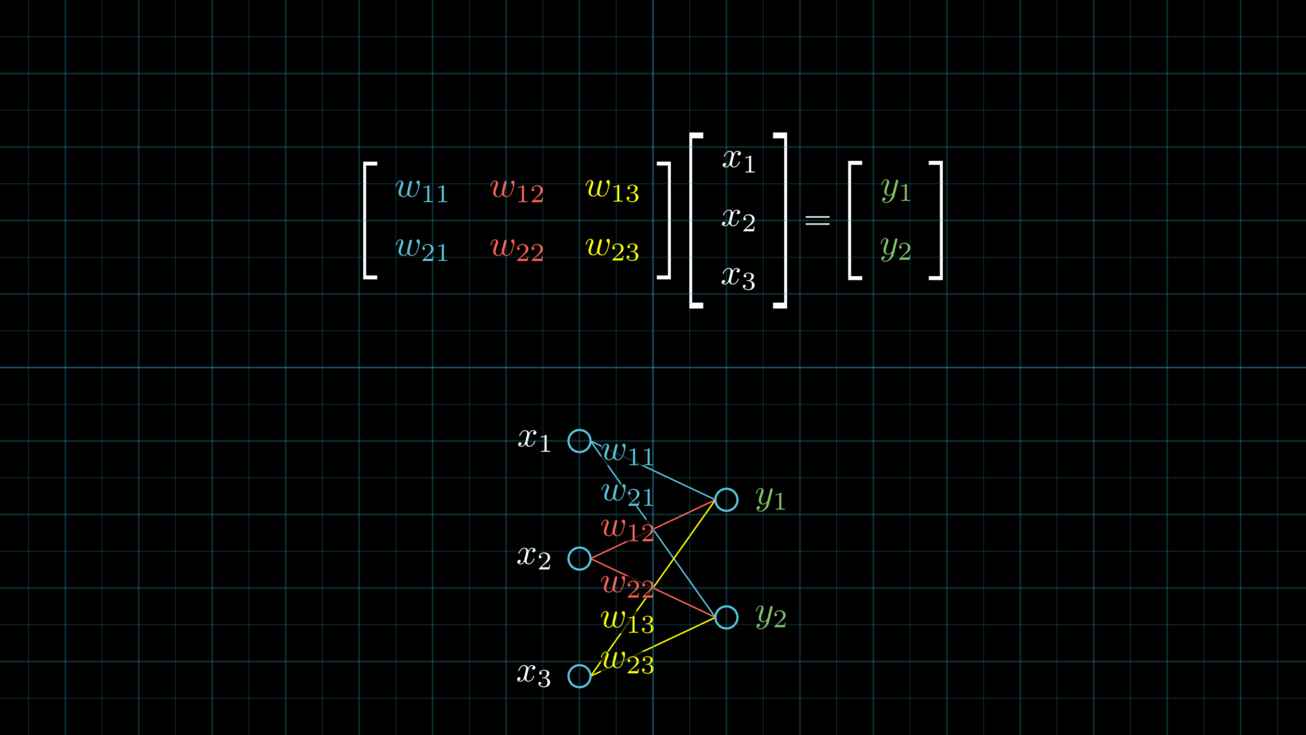

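This is an explainer that uses plenty of animations to help you understand neural networks intuitively. We introduce basic neural network and linear algebra concepts and the close relationship between the two. We also solve a binary classification task with a neural network to see how neural networks operate. After reading, you will appreciate how closely neural networks and linear algebra are related, laying a foundation for your AI journey.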

A Brief Look at Neural Machine Translation & English-to-Chinese Translation with Transformer and TensorFlow 2

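This article has two parts. The first half briefly reviews Seq2Seq models, the self-attention mechanism, and the Transformer, which are important recent developments and concepts in machine translation. The second half walks readers through implementing a Transformer that translates English sentences into Chinese. By understanding how it works, readers can apply similar concepts to a wide range of machine learning tasks such as image captioning, reading comprehension, and speech recognition.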

Generating Makoto Shinkai and Hayao Miyazaki Style Anime with CartoonGAN and TensorFlow 2

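This article shows 3 ways you can start using CartoonGAN right away to generate anime-style images. They include our GitHub project, a TensorFlow.js application, and a Colab notebook prepared for you in advance. Interested readers can also learn how to train their own CartoonGAN with TensorFlow 2.0.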

Let AI Write Some Jin Yong: How to Write Demi-Gods and Semi-Devils (天龍八部) with TensorFlow 2.0 and TensorFlow.js

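This article presents a text generation application built with TensorFlow 2.0 and TensorFlow.js. It also walks readers step by step through how to build such an application, following 7 steps common to deep learning projects. After reading, you will have a basic understanding of the process of developing AI applications.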

Find Word Semantic by Using Word2vec in TensorFlow

A naive Word2vec implementation using TensorFlow

Simple Convolutional Neural Network using TensorFlow

The goal here is to practice building convolutional neural networks to classify notMNIST characters using TensorFlow. As images get larger, training a fully connected network becomes impractical: there are simply too many parameters, so the model overfits quickly. CNNs address this problem through weight sharing. We will start by building a CNN with two convolutional layers and a fully connected layer, and then try pooling layers and other techniques to improve model performance.

Regularization for Multi-layer Neural Networks in Tensorflow

The goal of this assignment is to explore regularization techniques.

Using TensorFlow to Train a Shallow NN with Stochastic Gradient Descent

The goal here is to progressively train deeper and more accurate models using TensorFlow. We will first load the notMNIST dataset, which we have already cleaned. For the classification problem, we will first train two logistic regression models, one optimized with simple gradient descent and the other with stochastic gradient descent (SGD), to see the difference between these optimizers.